Hi, I am Martin, and this article is about my journey optimizing Redis as a key-value cache for millions of records. We had used Redis before, but never at such a large scale.

Redis is an awesome in-memory database. We use it as a flexible cache for our Quant service. Redis offers many data types, but this article covers only String and Hash, as they are probably the most commonly used.

Redis Strings

The Redis String type is the simplest type of value you can associate with a Redis key. It is the only data type in Memcached, so it is also very natural for newcomers to use it in Redis.

Since Redis keys are strings, when we use the string type as a value too, we are mapping a string to another string. The string data type is useful for a number of use cases, like caching HTML fragments or pages.

I tried to use String as a key-value store but ran into huge memory problems. We would have had to scale up our EC2 instance, resulting in a higher bill and unanswered scalability questions. Common sense suggests that using Redis Strings as a plain key-value store is the way to go, but the truth is that, while CPU-efficient, they are very memory hungry when storing hundreds of millions of records.

My optimization journey starts here:

I followed the https://redis.io/topics/memory-optimization guide but could not get satisfying results. I needed to improve memory consumption by 200%, that is, to store the same records in roughly a third of the memory. Changing the Redis configuration file gave me no more than a 10% saving, and, surprisingly, changing the data type from String to Hash didn’t help much either.

Redis Hashes

While hashes are handy to represent objects, actually the number of fields you can put inside a hash has no practical limits (other than available memory), so you can use hashes in many different ways inside your application.

It is worth noting that small hashes (i.e., a few elements with small values) are encoded in special way in memory that make them very memory efficient.

Use hashes when possible

Small hashes are encoded in a very small space, so you should try representing your data using hashes every time it is possible.

From <https://redis.io/topics/memory-optimization#use-hashes-when-possible>
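As a rough model of what "small" means here: Redis keeps a hash in its compact encoding (ziplist in older versions, listpack since Redis 7) only while it has at most `hash-max-ziplist-entries` fields and every field name and value is at most `hash-max-ziplist-value` bytes; beyond that it converts the hash to a regular hash table. The sketch below only models that rule in plain Python and does not talk to Redis. The helper name `stays_compact` is hypothetical, and the defaults of 512 entries and 64 bytes are assumed from the stock redis.conf, so check the values for your version.

```python
from typing import Dict

# Assumed stock defaults; verify against your own redis.conf.
DEFAULT_MAX_ENTRIES = 512  # hash-max-ziplist-entries
DEFAULT_MAX_VALUE = 64     # hash-max-ziplist-value, in bytes


def stays_compact(fields: Dict[str, str],
                  max_entries: int = DEFAULT_MAX_ENTRIES,
                  max_value: int = DEFAULT_MAX_VALUE) -> bool:
    """Return True if a hash with these fields would keep the compact encoding
    under the modeled rule (hypothetical helper, not a Redis API)."""
    if len(fields) > max_entries:
        return False
    # Every field name and every value must fit within max_value bytes.
    return all(
        len(k.encode()) <= max_value and len(v.encode()) <= max_value
        for k, v in fields.items()
    )


small = {str(i): "939" for i in range(100)}
big = {str(i): "939" for i in range(1000)}
print(stays_compact(small))  # True
print(stays_compact(big))    # False
```

On a live server, `OBJECT ENCODING <key>` reports which encoding a given hash actually uses.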

I knew that the Hash data type was the way to go, but its memory usage was very similar to that of the String data type, not the 2-10x improvement we had been promised. Then I found encouragement in a blog post by Instagram’s engineers:

To take advantage of the hash type, we bucket all our Media IDs into buckets of 1000 (we just take the ID, divide by 1000 and discard the remainder). That determines which key we fall into; next, within the hash that lives at that key, the Media ID is the lookup key *within* the hash, and the user ID is the value. An example, given a Media ID of 1155315, which means it falls into bucket 1155 (1155315 / 1000 = 1155):

HSET "mediabucket:1155" "1155315" "939"

HGET "mediabucket:1155" "1155315"

> "939"
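The bucketing arithmetic from the quote can be sketched in a few lines. The function name `media_bucket` is hypothetical (this is not Instagram’s code); it only computes which hash key and field a numeric Media ID maps to, using integer division by the bucket size of 1000.

```python
from typing import Tuple

BUCKET_SIZE = 1000  # bucket size from the Instagram example


def media_bucket(media_id: int, bucket_size: int = BUCKET_SIZE) -> Tuple[str, str]:
    """Map a numeric Media ID to the (hash key, field) pair used with HSET/HGET."""
    bucket = media_id // bucket_size  # divide and discard the remainder
    return f"mediabucket:{bucket}", str(media_id)


key, field = media_bucket(1155315)
print(key, field)  # mediabucket:1155 1155315
```

Because consecutive IDs share a bucket, each hash receives at most 1000 fields.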

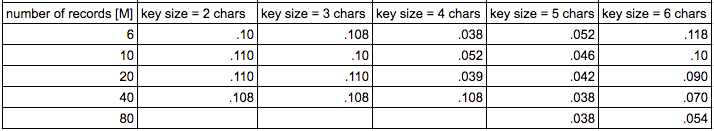

I used Hashes with the same key size (4 characters) but still didn’t get promising results. However, there was one big difference: they use integer IDs, whereas we use UUIDs. I started experimenting with different key sizes for Hashes, and that is where I hit our goal. I wrote a simple Ruby script (Gist: https://gist.github.com/manfm/21106686dc8e281cf2a0470cb9fb4a1e) that generates millions of keys and ran it for different key sizes.
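Exactly how each UUID is split into a hash key and a field is not spelled out here. The sketch below assumes one simple scheme: the first `key_size` hex characters of the UUID become the hash key and the remaining characters become the field. The names `uuid_bucket` and `avg_entries_per_bucket` and the `cache:` prefix are hypothetical, and this may differ from what the Ruby script does. It also shows the arithmetic behind key size: a prefix of `key_size` hex characters allows at most 16**key_size hashes, so each extra character cuts the average number of fields per hash by a factor of 16. Comparing that average with the `hash-max-ziplist-entries` limit is one plausible way to reason about why key size matters.

```python
import uuid
from typing import Tuple


def uuid_bucket(record_id: str, key_size: int) -> Tuple[str, str]:
    """Split a UUID into (hash key, field) using a hypothetical prefix scheme:
    the first `key_size` hex characters pick the hash, the rest is the field."""
    hex_id = uuid.UUID(record_id).hex  # 32 lowercase hex chars, no hyphens
    return f"cache:{hex_id[:key_size]}", hex_id[key_size:]


def avg_entries_per_bucket(records: int, key_size: int) -> float:
    """Average fields per hash if IDs spread evenly over 16**key_size prefixes."""
    return records / 16 ** key_size


print(uuid_bucket("9f1c2b7e-3d4a-4c5b-8e6f-0a1b2c3d4e5f", 5))
# ('cache:9f1c2', 'b7e3d4a4c5b8e6f0a1b2c3d4e5f')
for size in (4, 5):
    # 100 million records: 4 chars -> about 1526 per hash, 5 chars -> about 95
    print(size, round(avg_entries_per_bucket(100_000_000, size)))
```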

Results were promising:

Redis Hash memory allocation [GB]

Let’s take this raw data and calculate the memory consumption per 1 million records:

Redis Hash memory allocation [GB] per 1 million records
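The per-million normalization is a single division of the measured total by the number of records in millions. A minimal sketch; the helper name `gb_per_million` and the example inputs are hypothetical and not taken from the measurements:

```python
def gb_per_million(total_gb: float, records: int) -> float:
    """Normalize a total memory measurement to GB per 1 million records."""
    return total_gb / (records / 1_000_000)


# Hypothetical example: 3.0 GB measured for 40 million records.
print(f"{gb_per_million(3.0, 40_000_000):.3f}")  # 0.075
```

Normalizing this way makes runs with different record counts directly comparable on one chart.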

And put that data into a chart:

We can clearly see a huge difference in memory consumption across key sizes. (The missing data points at 80M records are where my workstation ran out of memory.)

Conclusion:

I cut memory consumption by 65% (from 0.108 to 0.038 GB per 1 million records) using Hashes with a 5-character key size. However, I could not find Redis configuration values that would reduce memory consumption any further.
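The 65% figure is a straightforward relative reduction. A quick check of the arithmetic, which also expresses the same saving as a ratio; the helper `reduction_pct` is hypothetical, and the inputs are the two numbers from the conclusion, read as GB per 1 million records:

```python
def reduction_pct(before: float, after: float) -> float:
    """Percentage of memory saved when usage drops from `before` to `after`."""
    return (before - after) / before * 100


before, after = 0.108, 0.038  # assumed GB per 1 million records
print(f"{reduction_pct(before, after):.0f}% less memory")  # 65% less memory
print(f"{before / after:.1f}x less memory per record")     # 2.8x less memory per record
```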

Redis Hash memory optimization is still a bit of magic to me, though I am very satisfied with the results. If you plan to use Redis with millions of records, definitely run tests to find the key size that works best for you.
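Before spending hours on full Redis runs, a quick offline check can show how UUIDs spread across hashes for each candidate key size. The sketch below is not the Ruby script from the Gist. It assumes the same hypothetical prefix scheme (the first `key_size` hex characters pick the hash), generates repeatable random version-4 UUIDs, and reports how many hashes would be used and how many fields the fullest one would hold. It does not measure Redis memory; that still requires loading the data and checking `INFO memory`. The 512 limit is an assumed default of `hash-max-ziplist-entries`.

```python
import random
import uuid
from collections import Counter
from typing import Tuple


def bucket_stats(n_records: int, key_size: int, seed: int = 0) -> Tuple[int, int]:
    """Return (hashes used, fields in the fullest hash) for n random UUIDs,
    assuming the first `key_size` hex characters select the hash."""
    rng = random.Random(seed)  # seeded so repeated runs give the same UUIDs
    counts = Counter(
        uuid.UUID(int=rng.getrandbits(128), version=4).hex[:key_size]
        for _ in range(n_records)
    )
    return len(counts), max(counts.values())


MAX_ENTRIES = 512  # assumed hash-max-ziplist-entries; check your redis.conf

for size in (2, 3, 4):
    used, largest = bucket_stats(100_000, size)
    status = "within" if largest <= MAX_ENTRIES else "over"
    print(f"key size {size}: {used} hashes, fullest has {largest} fields "
          f"({status} the {MAX_ENTRIES}-entry limit)")
```

Key sizes whose fullest hash stays within the limit are the ones worth measuring for real.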

More to read:

https://engineering.instagram.com/storing-hundreds-of-millions-of-simple-key-value-pairs-in-redis-1091ae80f74c

http://highscalability.com/blog/2014/9/8/how-twitter-uses-redis-to-scale-105tb-ram-39mm-qps-10000-ins.html

https://redis.io/topics/memory-optimization